Q: Is a Raspberry Pi 4 (4GB) powerful enough to run Ardour?

A: Yes

I set out to discover how it would cope with the modest requirements of this excellent digital audio workstation.

A word of caution: running the Raspberry Pi 4 in the official case is not recommended. Even during trivial non-audio tasks, it gets so hot that it quickly throttles itself down to a crawl.
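If you want to confirm that the Pi is actually throttling rather than just running warm, the firmware will tell you. The Python sketch below reads the SoC temperature from `/sys/class/thermal/thermal_zone0/temp` (reported in millidegrees Celsius) and decodes the bitmask printed by `vcgencmd get_throttled`. The bit meanings follow the Raspberry Pi documentation; the function names are illustrative, and the script assumes Raspbian with `vcgencmd` on the `PATH`.

```python
"""Check whether a Raspberry Pi is (or has been) throttling."""
import re
import subprocess
from typing import List

# Bit positions in the value printed by `vcgencmd get_throttled`.
THROTTLE_FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output: str) -> List[str]:
    """Turn a line such as 'throttled=0x50000' into readable flag names."""
    match = re.search(r"throttled=(0x[0-9a-fA-F]+)", output)
    if match is None:
        raise ValueError(f"unexpected vcgencmd output: {output!r}")
    value = int(match.group(1), 16)
    # Report every flag whose bit is set, in bit order.
    return [name for bit, name in sorted(THROTTLE_FLAGS.items()) if value & (1 << bit)]


def read_cpu_temp_celsius(path: str = "/sys/class/thermal/thermal_zone0/temp") -> float:
    """Read the SoC temperature; the kernel reports millidegrees Celsius."""
    with open(path) as f:
        return int(f.read().strip()) / 1000.0


def main() -> None:
    try:
        out = subprocess.run(
            ["vcgencmd", "get_throttled"], capture_output=True, text=True, check=True
        ).stdout
        temp = read_cpu_temp_celsius()
    except (OSError, subprocess.CalledProcessError) as exc:
        # vcgencmd or the sysfs file is missing: probably not a Raspberry Pi.
        print(f"Could not query the Pi firmware: {exc}")
        return
    flags = decode_throttled(out)
    print(f"CPU temperature: {temp:.1f} C")
    print("Throttling flags:", ", ".join(flags) if flags else "none")


if __name__ == "__main__":
    main()
```

A reading such as `throttled=0x50000` decodes to "under-voltage has occurred" and "throttling has occurred", i.e. the Pi throttled at some point since boot even if it is not throttling right now.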

For that reason, I ordered an acrylic case with heatsinks and a fan, which turned out to be the perfect environment for the Pi. Not only does it keep everything cool, it looks cool too!

I installed Raspbian Buster and downloaded the latest Ardour sources. If you are going to use this software, please make sure you make a contribution: there’s a lot of work in there that deserves to be supported!

The list of dependencies to install includes:

libaubio-dev libboost-dev libcppunit-dev libcurl4-openssl-dev libfftw3-dev libglib2.0-dev libglibmm-2.4-dev libgtkmm-2.4-dev python-isodate libjpeg-9 libarchive-dev libart-2.0-2 liblo-dev libsamplerate0-dev libsndfile1-dev libusb-1.0-0-dev libxml++2.6-dev liblilv-dev liblrdf0-dev lv2-dev libpangomm-1.4-dev libreadline-dev librubberband-dev libserd-dev libsord-dev libsratom-dev libsuil-dev libtag1-dev vamp-plugin-sdk libasound2-dev libudev-dev libjack-dev
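Before building, it can help to see which of these packages are still missing. The sketch below asks `dpkg-query --show --showformat=${Status}` about each package from the list above and prints a ready-to-run `apt-get install` line for the ones that are not installed. It assumes a Debian-based system such as Raspbian; the helper names are hypothetical, and the package names are copied verbatim from the list, so any name that does not exist in your release will simply show up as missing.

```python
"""List which of the Ardour build dependencies are not installed yet."""
import subprocess
from typing import Callable, Iterable, List

# Package names exactly as listed in the article (Raspbian Buster).
DEPENDENCIES = """
libaubio-dev libboost-dev libcppunit-dev libcurl4-openssl-dev libfftw3-dev
libglib2.0-dev libglibmm-2.4-dev libgtkmm-2.4-dev python-isodate libjpeg-9
libarchive-dev libart-2.0-2 liblo-dev libsamplerate0-dev libsndfile1-dev
libusb-1.0-0-dev libxml++2.6-dev liblilv-dev liblrdf0-dev lv2-dev
libpangomm-1.4-dev libreadline-dev librubberband-dev libserd-dev libsord-dev
libsratom-dev libsuil-dev libtag1-dev vamp-plugin-sdk libasound2-dev
libudev-dev libjack-dev
""".split()


def is_installed(package: str) -> bool:
    """Ask dpkg for the package status, e.g. 'install ok installed'."""
    result = subprocess.run(
        ["dpkg-query", "--show", "--showformat=${Status}", package],
        capture_output=True,
        text=True,
    )
    words = result.stdout.split()
    # dpkg-query exits non-zero for packages it has never heard of;
    # the last status word is 'installed' only for fully installed packages.
    return result.returncode == 0 and bool(words) and words[-1] == "installed"


def missing_packages(
    packages: Iterable[str], check: Callable[[str], bool] = is_installed
) -> List[str]:
    """Return the packages for which `check` reports 'not installed'."""
    return [name for name in packages if not check(name)]


def main() -> None:
    try:
        missing = missing_packages(DEPENDENCIES)
    except FileNotFoundError:
        print("dpkg-query not found; this sketch assumes a Debian-based system.")
        return
    if missing:
        print("sudo apt-get install " + " ".join(missing))
    else:
        print("All listed dependencies are installed.")


if __name__ == "__main__":
    main()
```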

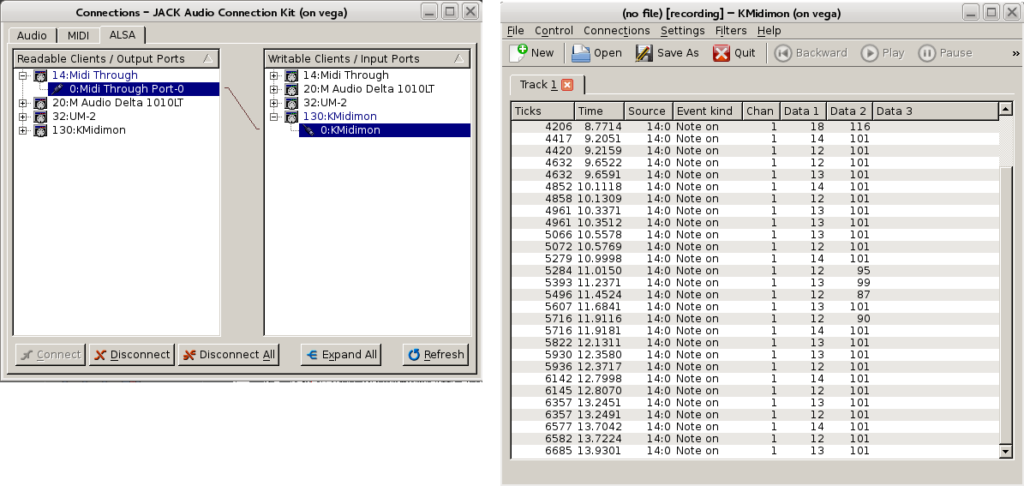

The Raspberry Pi is currently installed in my rehearsal/live rack and is connected to a Behringer U-PHORIA UMC1820 audio/MIDI interface (8/10 inputs/outputs, or 18/20 with ADAT).

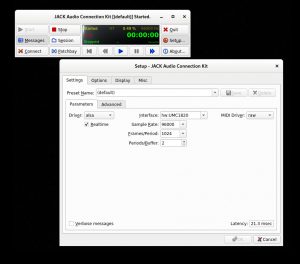

JACK is configured to run at 96 kHz. The measured latency of 21.3 ms is sub-optimal, but since I normally use direct monitoring, it does not matter to me.
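The nominal latency JACK reports is simply the buffer size divided by the sample rate: frames per period times the number of periods, over the sample rate. The post does not state the period settings, so the values below are an assumption, but 1024 frames with 2 periods at 96 kHz works out to exactly 21.3 ms, which suggests that is roughly what was in use. A true round-trip measurement would also include converter and driver delays on top of this figure.

```python
"""Nominal JACK buffer latency for a given period size and period count."""


def buffer_latency_ms(frames_per_period: int, periods: int, sample_rate: int) -> float:
    """Latency in milliseconds: (frames per period x periods) / sample rate."""
    return frames_per_period * periods / sample_rate * 1000.0


if __name__ == "__main__":
    rate = 96_000  # the sample rate used in this setup
    for frames in (256, 512, 1024):
        latency = buffer_latency_ms(frames, 2, rate)
        print(f"{frames:>4} frames x 2 periods @ {rate} Hz -> {latency:.1f} ms")
```

This prints 5.3, 10.7 and 21.3 ms. Smaller periods cut latency proportionally, but they also give the Pi less time to process each cycle, which is where xruns tend to start.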

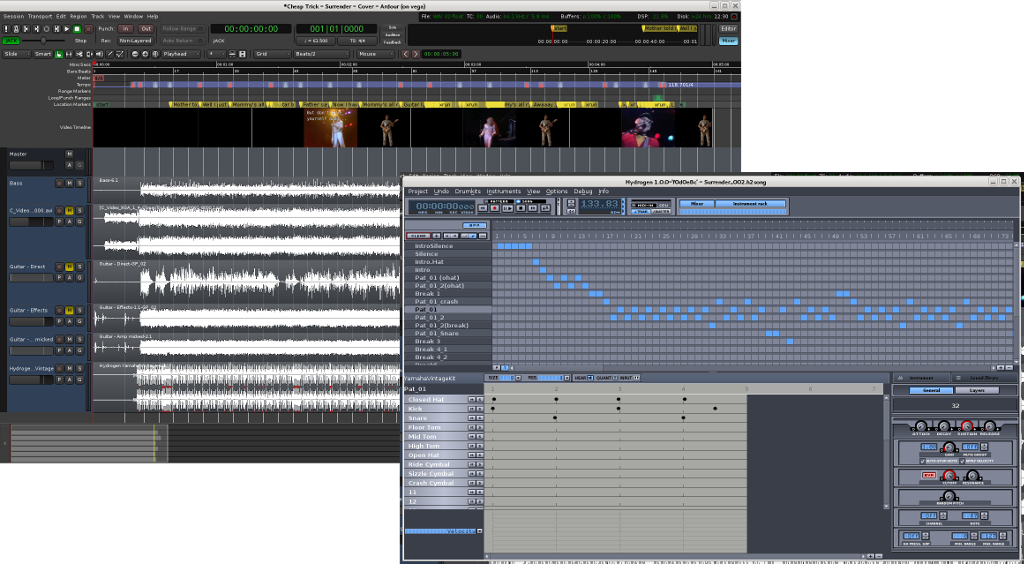

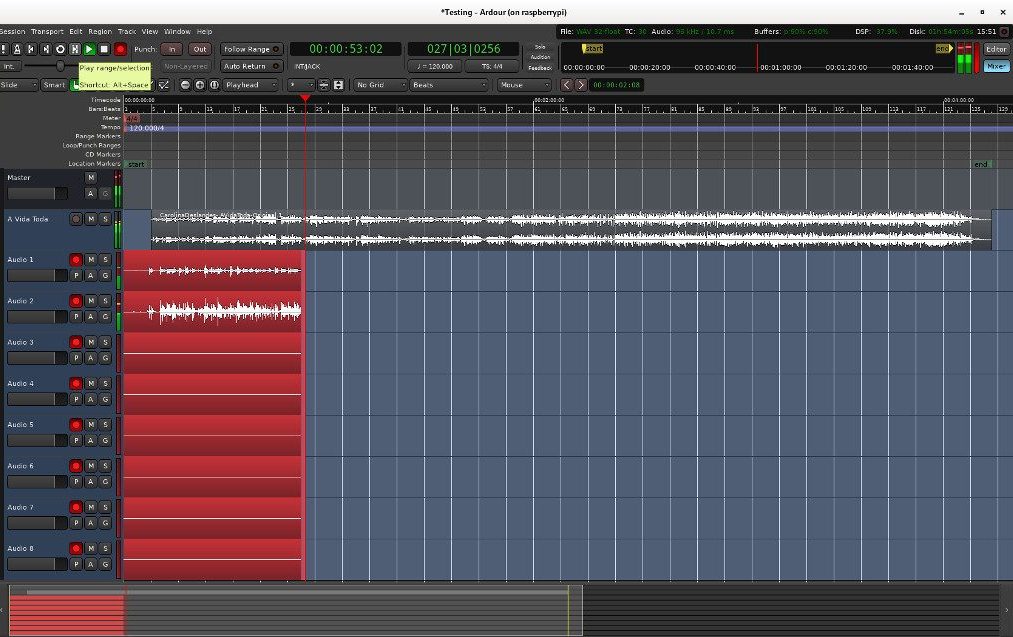

In my tests, I was able to consistently record 8 tracks without a single xrun, although I was not using any plugins. Ardour was running remotely using X11 display forwarding.
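For a sense of what those 8 tracks ask of the Pi's storage, the arithmetic is tracks times sample rate times bytes per sample. The sketch assumes mono tracks and shows both 32-bit float (4 bytes) and 24-bit (3 bytes) files, since the post does not say which format was used; it also ignores filesystem overhead and the real write speed of the SD card or USB drive, which were not measured.

```python
"""Rough disk throughput needed to record several tracks at once."""


def recording_rate_bytes_per_s(tracks: int, sample_rate: int, bytes_per_sample: int) -> int:
    """Bytes written per second for `tracks` mono tracks."""
    return tracks * sample_rate * bytes_per_sample


if __name__ == "__main__":
    for label, width in (("32-bit float", 4), ("24-bit", 3)):
        rate = recording_rate_bytes_per_s(8, 96_000, width)
        per_hour_gb = rate * 3600 / 1e9
        print(f"8 tracks, 96 kHz, {label}: {rate / 1e6:.2f} MB/s, {per_hour_gb:.1f} GB per hour")
```

That is about 3.07 MB/s (11.1 GB per hour) for 32-bit float and 2.30 MB/s (8.3 GB per hour) for 24-bit, so sustained write speed and free space matter more than raw CPU for plain multitrack recording.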

In retrospect, I doubt I have a valid use case for running Ardour in this setup. In the studio, I will continue to run Ardour on my desktop computer, either through my old, dependable M-Audio Delta 1010LT PCI card or with a new setup based on a Soundcraft Ui24R mixer.

For live recording, having to carry a laptop (or, alternatively, a monitor, keyboard and mouse) defeats the purpose of having everything inside a tiny device, especially if you need to record multiple inputs simultaneously, which requires a sizeable audio interface anyway. In that case, a simpler recording solution based on ecasound would be more manageable, not least because you could control it from a tablet running Termux. Again, in my case I would probably use the Soundcraft Ui24R to multitrack-record every channel and later transfer everything to the studio computer for processing.

Disclaimer: I am not in any way associated with the products linked or referred to in this article.